Every computer scientist has to learn how to write effective unit tests at some point in their (academic) career. For that reason, most computer science degrees now teach unit testing in some form. Sometimes this happens in introductory courses to lay a good foundation, but more often it is taught in more advanced Software Development courses.

For this guide, I researched the best ways to autograde student unit tests for Python assignments, and I will explain step by step how you can automatically assess pytest unit tests in CodeGrade. The theory and principles covered also apply to other programming languages, and articles on other languages (e.g. Java’s JUnit) will follow soon. This blog explains how to grade unit tests written by students; click here to learn how to grade Python code by using automated (unit) tests in CodeGrade.

Unit Test assessment metrics

There are multiple ways to assess unit tests. This guide covers two industry-standard metrics: code coverage and mutation testing. They serve different purposes and differ in complexity.

- Code Coverage is the most common metric to assess unit test quality. It measures what percentage of the source code is executed by the unit tests you have written. Different criteria can be used to calculate this, for instance the percentage of subroutines or the percentage of instructions that the tests cover.

- Mutation Testing is a more advanced metric to assess unit test quality that goes beyond simple code coverage. During mutation testing, a tool makes minor changes to your code (known as mutations) that should break it. It then checks whether each mutation makes your unit tests fail (which is what we want if the unit tests are correct and complete) or not (meaning the unit tests are incorrect or incomplete).

You may now be wondering why we would go as far as Mutation Testing to assess a simple unit test assignment. The answer is that, although the Code Coverage metric tells us how many lines or instructions our unit tests execute, it does not tell us how well those lines are actually tested. Unit tests are code too: they can contain bugs or typos, or fail to test certain edge cases. Mutation testing is very good at exposing such bugs and gaps, which makes it a very useful metric for assessing the quality of unit tests in education. Of course, a combination of both metrics is even more effective for assessing your students!
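The difference between the two metrics can be sketched with a small, hypothetical example. The function `is_valid_digit`, its hand-written mutant and both test functions below are invented for illustration. The "weak" test executes every line of the function, so it would reach full line coverage, yet it cannot tell the original from the mutant.

```python
def is_valid_digit(n):
    """Original: values 1 through 9 are valid Sudoku digits."""
    return 1 <= n <= 9


def is_valid_digit_mutant(n):
    """Mutant: the lower boundary check was changed from <= to <."""
    return 1 < n <= 9


def weak_test(fn):
    """Runs every line of fn, but never checks the boundaries."""
    return fn(5) is True and fn(0) is False


def strong_test(fn):
    """Also checks both boundary values and their neighbours."""
    return (
        fn(1) is True
        and fn(9) is True
        and fn(0) is False
        and fn(10) is False
    )


# The weak test passes on both versions: the mutant survives.
weak_on_original = weak_test(is_valid_digit)
weak_on_mutant = weak_test(is_valid_digit_mutant)

# The strong test passes on the original and fails on the mutant:
# the mutant is killed.
strong_on_original = strong_test(is_valid_digit)
strong_on_mutant = strong_test(is_valid_digit_mutant)

print("weak test:  ", weak_on_original, weak_on_mutant)
print("strong test:", strong_on_original, strong_on_mutant)
```

Both tests would score the same on code coverage, but only the strong test detects the changed boundary. That gap is exactly what mutation testing measures.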

Code Coverage Assessment for Python using pytest-cov

As it is by far the easiest to set up, we will start by assessing the student unit tests on Code Coverage in AutoTest. This is very easy to autograde, because the resulting percentage can be converted directly to the points we give our students.

Pytest, one of the most common unit testing frameworks for Python and supported out of the box by CodeGrade, has a really nifty package to calculate code coverage called `pytest-cov`. We can install it in the Global Setup script, alongside the installation of cg-pytest, using `cg-pytest install pytest-cov`. It is then very easy to run the student unit tests and report on their test coverage at the same time: use the unit test step in one of your AutoTest categories and add `-- --cov=. --cov-report xml:cov.xml` to your usual command. This will generate a coverage report in XML format called `cov.xml`. The final command for your unit test step becomes:

`cg-pytest run -- --cov=. --cov-report xml:cov.xml $FIXTURES/test_sudoku.py`

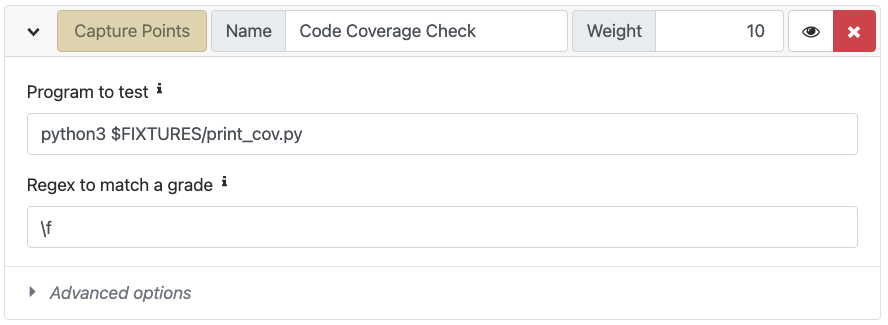

Now, all we have to do is parse the coverage report and use the percentage to give our students points. Luckily, CodeGrade’s Capture Points step is exactly what we need for this. I wrote a small script called `print_cov.py` and uploaded it as a fixture. Running it in the Capture Points step prints the coverage rate, which is then used to calculate the number of points awarded for this test.
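As a rough idea of what such a script could look like, here is a minimal sketch. It is not necessarily the same as the `print_cov.py` used in this guide. It assumes that the XML report written by `--cov-report xml` has a root `<coverage>` element with a `line-rate` attribute holding a fraction between 0 and 1, and that the Capture Points step is configured to read such a fraction from the output. The helper names and the inline sample report are made up for this example.

```python
import xml.etree.ElementTree as ET

# Trimmed-down, made-up sample of a coverage XML report (assumption: the
# root <coverage> element carries a `line-rate` attribute between 0 and 1).
SAMPLE_REPORT = """<coverage line-rate="0.85" branch-rate="0" version="0">
  <packages/>
</coverage>"""


def coverage_rate(xml_text):
    """Return the overall line coverage as a float between 0 and 1."""
    root = ET.fromstring(xml_text)
    return float(root.attrib["line-rate"])


def coverage_rate_from_file(path="cov.xml"):
    """Same as coverage_rate, but reads the report from disk."""
    root = ET.parse(path).getroot()
    return float(root.attrib["line-rate"])


# Demo on the inline sample; a real fixture would instead call
# coverage_rate_from_file("cov.xml") and print that result.
rate = coverage_rate(SAMPLE_REPORT)
print(rate)
```

With a report like the sample above, a student whose tests cover 85% of the lines would receive 85% of the points for this step.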
